Sigma Truth

Ensure your gen AI doesn’t just sound smart, but is smart

Large language models (LLMs) are confident, but not always correct. Without human oversight, their hallucinations go uncaught. Sigma Truth embeds factual accuracy into your AI pipeline, using expert human annotators to verify, attribute, and validate the information your models generate or are trained on.

What We Deliver

Factual integrity you can trust

Sigma’s human-in-the-loop teams are the backbone of trust in generative AI. Our workflows check that every claim, data point, and reference is grounded in reality rather than merely generated.

Core workflows include:

- Ground truth verification: Identify what’s fact, not fiction.

- Attribution & sourcing: Trace claims back to credible references.

- Accuracy scoring: Define thresholds of factuality and apply them consistently.

- Factual rewriting: Correct and rework outputs to meet truth standards.

- Research-validate-rewrite loop: Deep human research followed by structured validation and final polishing.
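As a rough illustration of the accuracy scoring step, the sketch below turns annotator verdicts into a score and checks it against a threshold. The `ClaimReview` type, the verdict labels, and the 0.9 default are assumptions made for this example, not Sigma’s actual schema or thresholds.

```python
from dataclasses import dataclass


@dataclass
class ClaimReview:
    """One annotator verdict on one claim (hypothetical schema)."""
    claim: str
    verdict: str  # assumed labels: "supported", "unsupported", "contradicted"


def accuracy_score(reviews):
    """Return the fraction of reviewed claims marked as supported."""
    if not reviews:
        return 0.0
    supported = sum(1 for r in reviews if r.verdict == "supported")
    return supported / len(reviews)


def meets_threshold(reviews, threshold=0.9):
    """Apply the same factuality threshold to every response."""
    return accuracy_score(reviews) >= threshold


# Placeholder claims; real reviews would carry the text and its sources.
reviews = [
    ClaimReview("Claim A", "supported"),
    ClaimReview("Claim B", "supported"),
    ClaimReview("Claim C", "contradicted"),
    ClaimReview("Claim D", "supported"),
]

print(accuracy_score(reviews))        # 3 of 4 claims supported
print(meets_threshold(reviews))       # compared against the 0.9 default
print(meets_threshold(reviews, 0.7))  # a looser, application-specific bar
```

In practice the threshold would be agreed with each client team and would likely differ by domain, with stricter bars where accuracy stakes are high.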

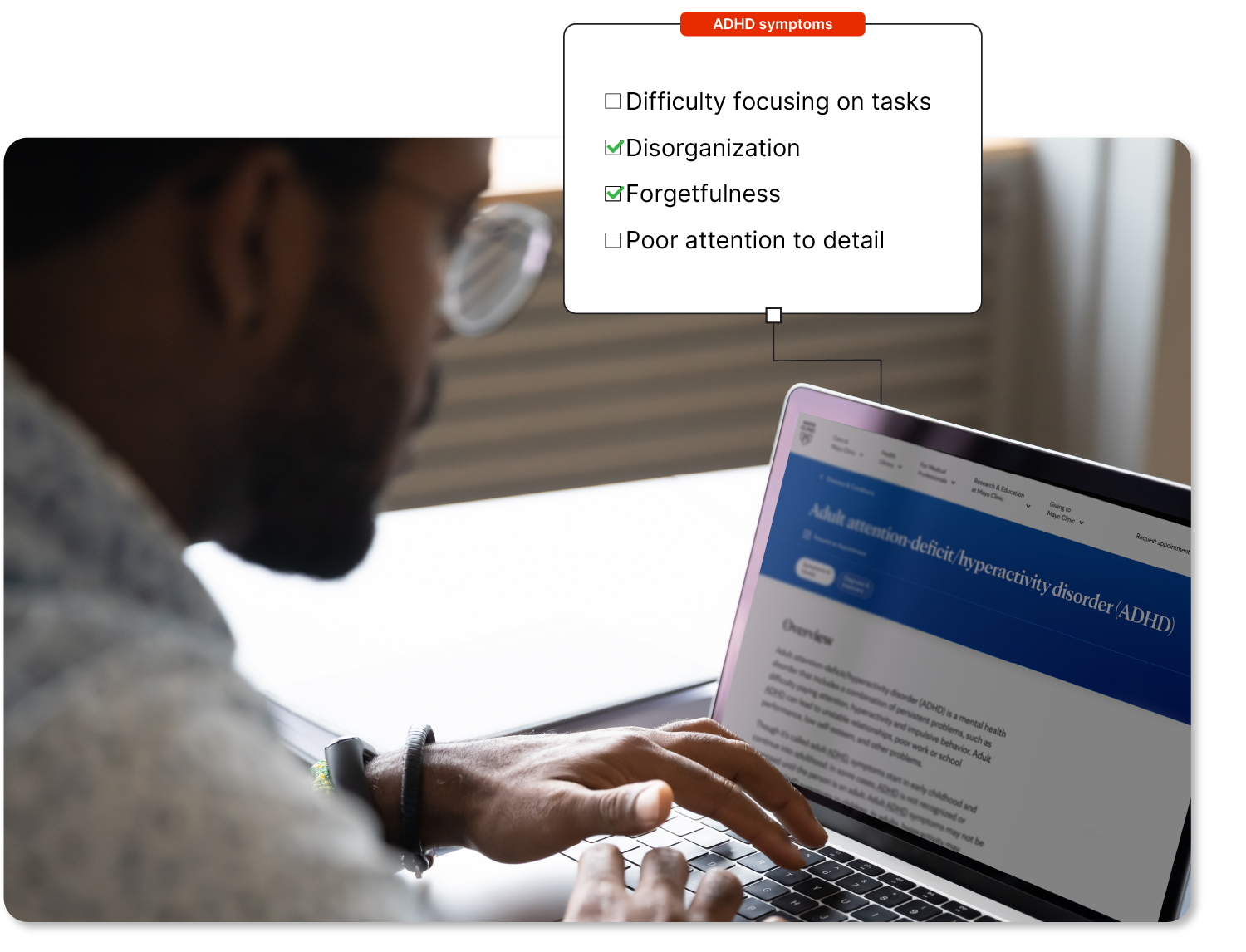

Example workflow

The artifact

For the question, “What are the symptoms of ADHD in adults?” the AI responded with a list.

The task

Compare the AI’s response to the Mayo Clinic website. Did the AI omit or misstate any key symptoms? If so, correct the response and cite your source.

The impact

This task requires the annotator to verify accuracy, relevance, and tone using real-world research — ensuring the model doesn’t hallucinate medical advice.
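A first-pass comparison like the one in this task can be sketched in a few lines. The function names and the placeholder items below are hypothetical and stand in for the AI’s list and the reference list. Exact string matching is only a starting point, and the annotator still judges paraphrases, relevance, and tone.

```python
def normalize(item: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(item.lower().split())


def compare_to_reference(ai_items, reference_items):
    """Flag reference items the AI omitted and AI items the reference lacks."""
    ai = {normalize(i) for i in ai_items}
    ref = {normalize(i) for i in reference_items}
    return {
        "omitted": sorted(ref - ai),     # in the reference, missing from the AI
        "unverified": sorted(ai - ref),  # in the AI response, not in the reference
    }


# Placeholder items only; no real medical content is implied.
reference = ["Symptom A", "Symptom B", "Symptom C"]
ai_response = ["symptom a", "Symptom  C", "Symptom D"]

result = compare_to_reference(ai_response, reference)
print(result["omitted"])     # candidates for the annotator to add, with a citation
print(result["unverified"])  # candidates for the annotator to research or remove
```

The output is a worklist for the annotator, not a verdict: an "unverified" item may be a valid paraphrase, and an "omitted" item may be out of scope for the question.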

How we do it

Human expertise at the core

- Vet data against primary and reputable sources.

- Correct inaccuracies before they reach your users.

- Apply domain-specific knowledge (e.g., medical, legal, scientific) where accuracy stakes are high.

- Partner with your teams to define truth standards tailored to your application.

Why it matters

Without truth, AI is a liability

AI that “sounds right” but is wrong erodes user trust, exposes your brand to reputational risk, and can trigger legal or ethical fallout. The cost of inaccuracy compounds fast — and LLMs won’t catch it.

Investing in Truth means:

- Lower hallucination rates

- Higher user trust

- Better model performance

- Enterprise-grade AI readiness

Discover the new standards for AI quality

Traditional AI chased 99.99% accuracy — but gen AI demands nuance, judgment, and creativity. Learn the 10 new markers for quality human data annotation that power smarter, safer LLMs.

Ready to learn more?

Get in touch with our sales team to learn more about what Sigma Truth can do for your business.

Our other services

Meaning

Learn about Sigma Meaning

Integration

Teach your model to think in context. We support multimodal labeling, prompt engineering, iterative refinement, and reinforcement learning from human feedback (RLHF), so your AI reasons rather than just reacts.

Learn about Sigma Integration

Perception

Align outputs with human expectation. We annotate tone, intent, and emotion — and run side-by-side evaluations to help your models speak with empathy, not just accuracy.

Learn about Sigma Perception

Data

Start strong with the right training data. We source, collect, annotate, and synthesize diverse datasets that reflect real-world complexity and reduce downstream failure.

Learn about Sigma Data

Protection

Build AI that’s safe by design. Our teams detect and remove PII, enforce data compliance, and perform red teaming to expose vulnerabilities before attackers do.

Learn about Sigma Protection