Scaling generative AI: Benefits, risks, and limitations

Before generative AI, traditional AI technology focused on solving well-defined problems. These traditional AI models were designed for specific tasks, such as text classification, entity extraction, and predictive modeling, limiting business applications to narrow domains.

A glimpse back at McKinsey’s State of AI in 2022 reveals popular enterprise AI use cases, such as optimizing service operations, customer service analytics, and risk modeling.

However, generative AI changed the game, opening a new frontier: the ability to create something new that resembles its training data. Instead of only identifying patterns and making predictions, gen AI can now go beyond reproducing its training set, generating original content in the form of text, images, audio, video, and more.

Gen AI’s applications are vast and diverse. In marketing operations, it can be used to create original content tailored to specific audiences in different tones or formats. In healthcare, it can analyze medical images, enabling faster and more precise interpretation of X-rays, CT scans, and MRIs.

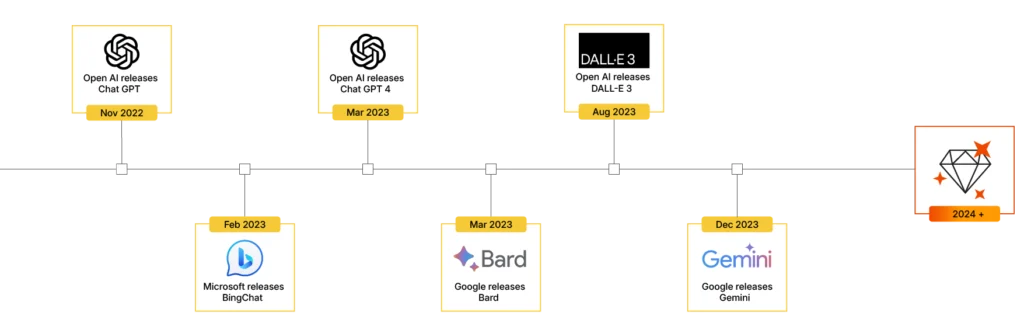

While 2023 was a year of hype, discovery, and understanding of gen AI’s capabilities, 2024 marked a significant shift as companies began transitioning from initial experimentation to larger-scale gen AI projects. But as businesses begin to reap the rewards of this transformative technology, there is also growing concern about its risks and impact on society.

The gen AI era is still in its early stages, but it is accelerating quickly. According to Forrester’s May 2024 Artificial Intelligence Pulse Survey, “67% of AI decision-makers say their organization plans to increase investment in generative AI in the coming year.” Early adopters are focusing on speed and ROI while trying to address challenging aspects such as data quality and security.

“The mass adoption of generative AI has transformed customer and employee interactions and expectations. As a result, gen AI has catapulted AI initiatives from ‘nice-to-haves’ to the basis for competitive roadmaps.”

— Srividya Sridharan, VP and Group Research Director at Forrester

Let’s zoom in on the key factors shaping gen AI in 2024:

The big shift: From niche applications to being everywhere

The rise of generative AI and large language models (LLMs) has enabled widespread access to artificial intelligence technology. No longer confined to specific use cases, gen AI is now being infused into every aspect of the enterprise, transforming how work is done across all job roles.

An MIT Technology Review report based on a 2022 survey showed that, before gen AI, only 8% of respondents considered AI essential for at least three business functions. Now, foundation models enable new applications with less data, expanding gen AI across multiple tasks, including creative roles traditionally seen as human-centered.

As MIT Technology Review states, we are witnessing “the beginnings of truly enterprise-wide AI,” with gen AI capabilities integrated into daily workplace activities.

From hype to reality: Measuring the impact of gen AI on business

According to a recent Deloitte report, “State of generative AI in the enterprise,” companies in 2024 are shifting their focus from initial enthusiasm to a more careful assessment of gen AI’s potential, demanding tangible and measurable results from their investments.

According to the report, 42% of respondents identified improved efficiency, productivity, and cost reduction as the top benefits of their gen AI initiatives. This initial success is driving their plans to scale gen AI applications across more use cases. In fact, two out of three surveyed organizations indicated that they are “increasing their investments in generative AI because they have seen strong early value to date.”

Despite this, scaling gen AI beyond pilots and proofs of concept presents significant challenges. Deloitte’s report indicates that 68% of organizations have deployed less than a third of their gen AI experiments into production.

Early adopters are seeing the benefits of gen AI, but also its risks

While gen AI offers untapped potential, it also presents a range of risks with uncertain long-term implications. A recent McKinsey survey found that, while 63% of respondents see gen AI as a “high” or “very high” priority, 91% of those respondents don’t feel “very prepared” to implement gen AI in a responsible way.
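To see how the two McKinsey figures combine, a quick back-of-the-envelope calculation helps. The sketch below assumes the 91% applies only to the 63% who rate gen AI as a high or very high priority, as the wording suggests. The variable names are illustrative and do not come from the survey.

```python
# Shares quoted above; variable names are illustrative, not from the survey.
high_priority = 0.63       # rate gen AI as a "high" or "very high" priority
not_very_prepared = 0.91   # of that group, do not feel "very prepared"

# Share of ALL respondents who prioritize gen AI but do not feel very prepared.
prioritize_but_unprepared = high_priority * not_very_prepared

# Share of ALL respondents who prioritize gen AI and do feel very prepared.
prioritize_and_prepared = high_priority * (1 - not_very_prepared)

print(f"{prioritize_but_unprepared:.1%}")  # 57.3%
print(f"{prioritize_and_prepared:.1%}")    # 5.7%
```

Under that reading, roughly 57% of all respondents treat gen AI as a priority without feeling very prepared to implement it responsibly, while fewer than 6% both prioritize it and feel very prepared.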

The primary concerns among companies regarding generative AI include:

- Hallucinations (inaccurate information)

- Data privacy and ownership issues

- Amplifying existing bias

- Legal risks such as plagiarism or copyright infringement

- Spreading misinformation

- Lack of transparency and explainability

Many of these concerns hinge on the quality of the data used to train gen AI systems. Gen AI can replicate or even amplify the biases and inaccuracies present in its training data. This underscores the importance of creating reliable, diverse, accurate, and unbiased training data.

It also highlights the need for human supervision to train gen AI models. As Matt McLarty, CTO at Boomi, says in MIT Technology Review’s Playbook for Crafting AI Strategy: “We need to have lots of human guardrails in the picture.”

Scaling gen AI: Challenges and AI-readiness gaps

Despite initial success, early adopters face significant obstacles to scaling beyond pilot programs. Gartner predicts that by the end of 2025, at least 30% of generative AI projects will be abandoned after proof of concept. The main reasons?

- Poor data quality and infrastructure limitations

- Inadequate risk controls and governance issues

- Unclear business value

Similarly, an MIT Technology Review report shows that 49% of surveyed organizations cited poor data quality as the most significant barrier to implementing AI, especially among companies with revenue of over US$10 billion.

A well-structured data management strategy is key for successfully scaling generative AI projects. This requires a balanced approach that involves processes, talent, data, and technology. However, Cisco’s AI Readiness Index highlights a significant gap, as only 14% of global organizations are fully equipped to integrate AI into their businesses.

This becomes even more concerning for generative AI applications, which demand high-quality, diverse, and accessible data.

Moreover, to support gen AI and machine learning initiatives, companies require substantial computational power and an adequate infrastructure. Cisco’s findings reveal that only 13% of IT leaders believe their networks can adequately handle the computational demands of these technologies.

How businesses are adopting gen AI solutions

When it comes to developing gen AI capabilities, businesses take various approaches. A McKinsey report identifies three archetypes:

- Takers: Businesses consume preexisting services through APIs or other basic interfaces. This is the simplest approach in terms of infrastructure needs. Off-the-shelf solutions that can be up and running in little time are good examples of this approach.

- Shapers: Organizations access existing models and fine-tune them with internal company data. This approach is suitable for companies looking to scale AI capabilities by integrating their own data into foundation models, which are massive models designed to perform general and varied tasks.

- Makers: Companies build their own foundation models from scratch to address specific use cases. This is the most complex and expensive approach, requiring very large volumes of data, massive computing power, and deep expertise.

In the same vein, KPMG research indicates that only 12% of companies are building gen AI models in-house. Half are buying or leasing from external vendors, while 29% implement “a mix of building, buying, and partnering.”

“With gen AI, we are moving from a binary world of ‘build vs. buy’ to one that might be better characterized as ‘buy, build, and partner,’ in which the most successful organizations are those that construct ecosystems that blend proprietary, off-the-shelf, and open-source models.”

— McKinsey, “Beyond the hype: Capturing the potential of AI and gen AI in tech, media, and telecom”

Companies choose their approaches based on various factors, such as their overall goals, the complexity of their business use case, the desired level of control over training datasets, and the scale of their project, among others.

However, one thing is clear: At least for now, only a few will adopt the Makers archetype. But that’s not necessarily a disadvantage.

How companies are sourcing gen AI models (Source: KPMG, “Gen AI survey 2024”):

- 50% buy or lease from vendors

- 29% mix building, buying, and partnering

- 12% build in-house
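The KPMG shares can be checked with simple arithmetic. The sketch below assumes all three percentages refer to the same group of respondents and that the categories do not overlap. The dictionary labels are illustrative.

```python
# Shares from the KPMG figures quoted above; keys are illustrative labels.
sourcing_shares = {
    "buy or lease from vendors": 50,
    "mix of build, buy, and partner": 29,
    "build in-house": 12,
}

# How much of the surveyed population the three categories cover.
accounted_for = sum(sourcing_shares.values())
unaccounted = 100 - accounted_for

# Companies that rely at least partly on external vendors or partners.
external_or_mixed = (
    sourcing_shares["buy or lease from vendors"]
    + sourcing_shares["mix of build, buy, and partner"]
)

print(accounted_for, unaccounted, external_or_mixed)  # 91 9 79
```

Under those assumptions, the three categories cover 91% of respondents, and the remaining 9% is not broken out in the figures quoted here. About 79% of companies rely at least partly on external vendors or partners, which is consistent with the point that few organizations will take the Makers route.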

“Most organizations are not going to build large language models, which are expensive and require a massive amount of infrastructure,” said Matt McLarty, CTO at the software company Boomi, in the MIT report. “Instead, they need to become experts in applying the new technologies in their own business context. The companies that don’t put the AI cart before the business problem horse are going to be better positioned.”