Building LLMs with sensitive data: A practical guide to privacy and security

Know your data: what “sensitive” means in practice. Why this matters for LLMs: leakage is real. Modern models can memorize and later regurgitate rare or sensitive strings from their training corpora. Research has demonstrated that training data can be extracted from production LLMs with carefully crafted prompts, and a growing body of work documents membership-inference risks. The […]
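One simple way to probe for the verbatim regurgitation described here is to keep a list of known sensitive or planted “canary” strings and flag any model output that reproduces one. The sketch below is a minimal illustration under stated assumptions: outputs are plain strings, the function names `normalize` and `find_leaked_strings` are hypothetical, and a real extraction audit would use far more thorough methods than exact substring matching.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting changes don't hide a match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_leaked_strings(outputs: list[str], sensitive: list[str]) -> list[tuple[int, str]]:
    """Return (output_index, sensitive_string) pairs where an output contains a sensitive string verbatim.

    Hypothetical helper: matching is exact after normalization, so paraphrased
    or partially reproduced secrets will NOT be caught.
    """
    needles = [(s, normalize(s)) for s in sensitive]
    hits = []
    for i, out in enumerate(outputs):
        haystack = normalize(out)
        for original, needle in needles:
            if needle and needle in haystack:
                hits.append((i, original))
    return hits


if __name__ == "__main__":
    # Hypothetical canary strings assumed to appear in the training data.
    canaries = ["acct 4417-2290-XQ", "jane.doe@example.com"]
    samples = [
        "Sure, the account on file is ACCT 4417-2290-xq.",
        "I can't share personal contact details.",
    ]
    for idx, leaked in find_leaked_strings(samples, canaries):
        print(f"output {idx} reproduces sensitive string: {leaked!r}")
```

Normalizing case and whitespace is a deliberate trade-off: it catches trivially reformatted leaks while keeping the check cheap enough to run over large batches of sampled outputs.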

When “uh… so, yeah” means something: teaching AI the messy parts of human talk

A quick primer: what’s what (and why it matters). Signals, not noise: disfluency carries meaning. A sentence like “I — I can probably help … later?” encodes hesitation, caution, and weak commitment. If ASR or cleanup filters strip stutters, fillers, or rising intonation, downstream models may overstate the speaker’s confidence. Annotation pattern | Example: “That’s a whole — […]
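If disfluencies are signal rather than noise, one option is to tag them instead of deleting them. The sketch below assumes plain-text transcripts; the filler list, the `DisfluencyTags` fields, and the use of a final “?” as a stand-in for rising intonation are all illustrative assumptions (real prosody needs audio features), not an established annotation scheme.

```python
import re
from dataclasses import dataclass

# Hypothetical filler inventory; a real project would define this in its annotation guidelines.
FILLERS = {"uh", "um", "er", "hmm"}


@dataclass
class DisfluencyTags:
    fillers: list[str]      # filler tokens found in the utterance
    repetitions: list[str]  # words immediately repeated, e.g. a stutter like "I - I"
    trailing_off: bool      # utterance contains an ellipsis ("…" or "...")
    rising_end: bool        # ends in "?", a rough text-only proxy for rising intonation


def tag_disfluencies(utterance: str) -> DisfluencyTags:
    """Record disfluency markers alongside the text instead of stripping them."""
    tokens = re.findall(r"[\w']+", utterance.lower())
    fillers = [t for t in tokens if t in FILLERS]
    repetitions = [a for a, b in zip(tokens, tokens[1:]) if a == b]
    trailing_off = "…" in utterance or "..." in utterance
    rising_end = utterance.rstrip().endswith("?")
    return DisfluencyTags(fillers, repetitions, trailing_off, rising_end)


if __name__ == "__main__":
    print(tag_disfluencies("I - I can probably help ... later?"))
    print(tag_disfluencies("Uh, so, yeah."))
```

Keeping these tags next to the cleaned transcript lets a downstream model still see the hesitation and weak commitment that a cleanup filter would otherwise erase.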

Bias detection in generative AI: Practical ways to find and fix it

Protection: Adversarial testing surfaces unfair behavior. Common bias patterns: Prompt-induced harms (e.g., stereotyping a profession by gender), jailbreaks that elicit unsafe content about protected classes, or refusal behavior that differs by demographic term. How to combat it: Run red-teaming at scale with targeted attack sets, including protected-class substitutions, counterfactual prompts (“they/them” → “he/him”), and policy stress tests […]
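Counterfactual prompts like the “they/them” → “he/him” swap can be generated mechanically and then sent to the model in pairs. This is a minimal sketch, assuming a small hypothetical substitution table (`PRONOUN_SWAPS`) and whole-word regex replacement; naive swaps can break grammar (“they are” becomes “he are”), so real attack sets need linguistic review.

```python
import re
from typing import Iterable, Iterator

# Hypothetical substitution table; real attack sets cover many more terms and directions.
PRONOUN_SWAPS = {
    "they": "he",
    "them": "him",
    "their": "his",
    "theirs": "his",
    "themselves": "himself",
}


def swap_terms(prompt: str, swaps: dict[str, str]) -> str:
    """Replace whole-word terms (case-insensitively), preserving a leading capital letter."""
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, swaps)) + r")\b", re.IGNORECASE)

    def repl(match: re.Match) -> str:
        word = match.group(0)
        target = swaps[word.lower()]
        return target.capitalize() if word[0].isupper() else target

    return pattern.sub(repl, prompt)


def counterfactual_pairs(prompts: Iterable[str], swaps: dict[str, str]) -> Iterator[tuple[str, str]]:
    """Yield (original, counterfactual) pairs, skipping prompts the swap leaves unchanged."""
    for p in prompts:
        cf = swap_terms(p, swaps)
        if cf != p:
            yield p, cf


if __name__ == "__main__":
    prompts = ["Should they lead the engineering team?", "Describe a typical nurse."]
    for original, counterfactual in counterfactual_pairs(prompts, PRONOUN_SWAPS):
        print(original, "->", counterfactual)
```

A red-team harness would send both prompts in each pair to the model and compare the responses, for example checking whether one variant is refused while the other is answered.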

FAQs: Human data annotation for generative and agentic AI

What is human data annotation in generative AI? Human data annotation is the process in which expert human reviewers label AI training data for meaning, tone, and intent, or check it for accuracy. In generative AI, this helps models learn to produce outputs that are truthful, emotionally appropriate, localized and culturally relevant, and aligned with user intent. […]

Sigma AI defines new standards for quality in generative AI

As enterprises face increased risk from hallucinations and misinformation, Sigma Truth evolves benchmarks beyond accuracy. PRESS RELEASE: MIAMI – September 2, 2025 – Sigma AI, The Human Context Company and a global leader in human‑in‑the‑loop data annotation, today announced new standards for evaluating and improving the quality of generative AI outputs. As enterprises rapidly adopt […]

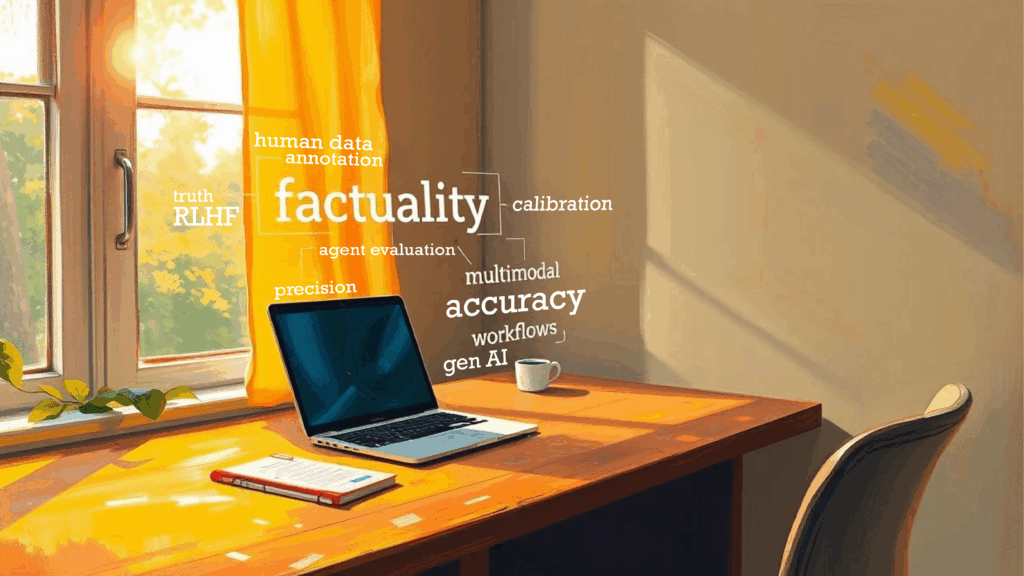

Generative AI glossary for human data annotation

Agent evaluation: The process of assessing how well an AI agent performs its tasks, focusing on its effectiveness, efficiency, reliability, and ethical considerations. Example: An annotator reviews an interaction between a person and an AI agent, determining whether the person’s needs were met and whether they experienced any frustration or difficulty. Attribution annotation: Labeling where facts or statements originated, such […]

Why inter‑annotator agreement is critical to best‑in‑class gen AI training

What is inter‑annotator agreement (IAA) and why is it important? IAA measures how consistently multiple annotators label the same content. It helps quantify whether annotation guidelines are clear and whether annotators share a reliable understanding. Common metrics: Even seasoned experts often show α = 0.12–0.43 in high‑subjectivity tasks like emotional attribute scoring, especially before refining […]
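The α values quoted here are agreement coefficients; one widely used IAA metric written as α is Krippendorff’s alpha, though the excerpt does not say which α it reports, so treat that as an assumption. Below is a minimal sketch for nominal (categorical) labels using α = 1 − D_o/D_e, computed from a coincidence matrix; the function name `krippendorff_alpha_nominal` is hypothetical, and missing labels are simply omitted from each item’s list.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units: list[list[str]]) -> float:
    """Krippendorff's alpha for nominal labels (sketch).

    `units` holds one list per annotated item, containing the labels that
    different annotators assigned to it (missing labels left out).
    """
    # Coincidence matrix: each ordered pair of labels from different annotators
    # on the same item contributes 1 / (m - 1), where m is that item's label count.
    o: Counter = Counter()
    for labels in units:
        m = len(labels)
        if m < 2:
            continue  # items with fewer than two labels cannot be paired
        for c, k in permutations(labels, 2):
            o[(c, k)] += 1.0 / (m - 1)

    # Marginal totals per label and the overall number of pairable values.
    n_c: Counter = Counter()
    for (c, _k), w in o.items():
        n_c[c] += w
    n = sum(n_c.values())

    # Nominal distance: 1 if labels differ, 0 otherwise.
    observed = sum(w for (c, k), w in o.items() if c != k)
    expected = sum(n_c[c] * n_c[k] for c in n_c for k in n_c if c != k)
    if expected == 0:
        raise ValueError("alpha is undefined when all pairable labels are identical")
    return 1.0 - (n - 1) * observed / expected


if __name__ == "__main__":
    # Three items, each labeled by two annotators.
    print(krippendorff_alpha_nominal([["a", "a"], ["a", "b"], ["b", "b"]]))
```

For the three-item example in the sketch, α comes out to about 0.44; perfect agreement gives 1, and systematic disagreement can push α below 0. Ordinal or interval scores, such as emotional-attribute ratings, would need a different distance function than the 0/1 nominal one used here.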

Why gen AI quality requires rethinking human annotation standards

From accuracy to agreement: A new lens on quality. Traditional AI annotation tasks (e.g., labeling a cat in an image) tend to yield high human agreement and low error rates. Annotators working with clear guidelines often achieve over 98% accuracy, sometimes even 99.99%, especially when backed by tech-assisted workflows. But these standards don’t […]

Human insight in data annotation: Training creative gen AI

Insight in gen AI data annotation. Traditional AI focused on pattern recognition and classification tasks based on clearly defined labels. Generative AI, which strives to emulate human creativity and expertise, brought a paradigm shift. This requires a different approach to training data. Human annotators have evolved from labelers to insightful collaborators, enriching the data with […]